How to Edit robots.txt in Grigora

The robots.txt file tells search engine bots which parts of your website they may crawl. Grigora lets you edit this file from your site's SEO settings, so you can manage bot access without leaving the dashboard.

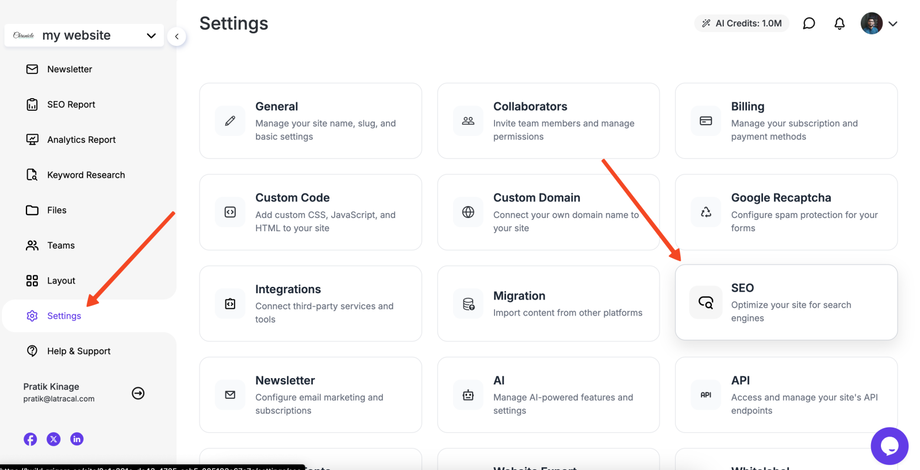

Step 1: Navigate to SEO Settings

- Log in to your Grigora dashboard and select the website you want to edit.
- In the left-hand sidebar menu, click Settings.
- On the Settings page, click the SEO option.

Step 2: Update the robots.txt Field

-

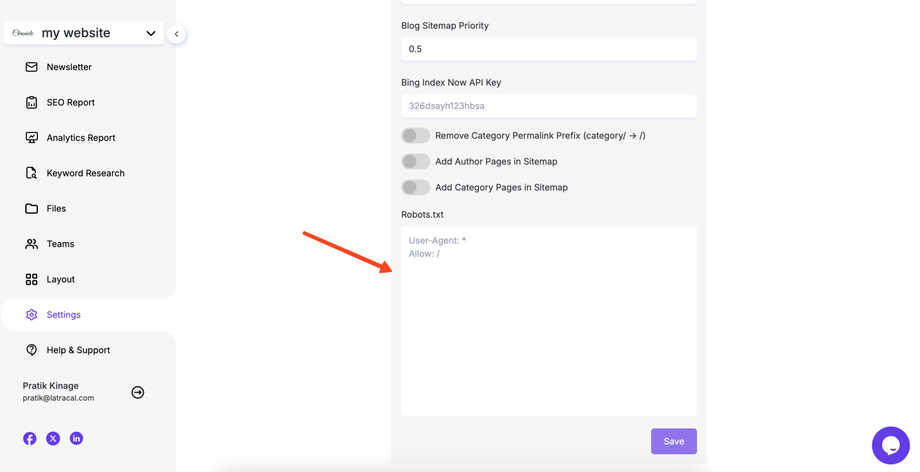

On the SEO settings page, locate the Robots.txt field.

-

In the text area provided, enter or paste your desired

robots.txtrules.-

Tip: If you need to allow full access to all bots, you typically use:

User-agent: * Allow: / -

If you need to prevent all bots from crawling the entire site, you would use:

User-agent: * Disallow: /

-

-

After making your changes, click the Save button.
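
Before saving, it can help to check what a rule set actually permits. The following is a minimal sketch using Python's standard `urllib.robotparser` module; the `is_allowed` helper and the `example.com` URL are illustrative assumptions and are not part of Grigora.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(rules, url, user_agent="*"):
    """Return True if `rules` (robots.txt text) lets `user_agent` fetch `url`."""
    parser = RobotFileParser()
    # parse() expects an iterable of lines, one directive per line.
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


# The two rule sets from the tip above, one directive per line.
ALLOW_ALL = "User-agent: *\nAllow: /\n"
BLOCK_ALL = "User-agent: *\nDisallow: /\n"

print(is_allowed(ALLOW_ALL, "https://example.com/blog/"))  # True
print(is_allowed(BLOCK_ALL, "https://example.com/blog/"))  # False
```

Each directive must sit on its own line. Pasting `User-agent: * Disallow: /` as a single line would not be read as two separate rules.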

Step 3: Publish Your Website

- To make sure your new robots.txt file is live, save and publish your website again.
- Once published, search engine bots that honor robots.txt will pick up the updated rules the next time they fetch the file.
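
To confirm that the published file matches what you entered, you could compare the live file with your saved rules. This is a rough sketch that assumes your site serves the file at `/robots.txt` on its root, as the robots.txt standard expects; the helper names are hypothetical, and the comparison would need loosening if Grigora adds lines of its own to the published file.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_url(site_url):
    """Build the robots.txt URL at the root of the site's host."""
    return urljoin(site_url, "/robots.txt")


def normalize(text):
    """Drop blank lines and surrounding whitespace so layout differences are ignored."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def fetch_text(url):
    """Download a URL and decode it as UTF-8 (requires network access)."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8")


def live_rules_match(site_url, expected_rules, fetch=fetch_text):
    """Return True if the published robots.txt matches the rules you saved."""
    live = fetch(robots_url(site_url))
    return normalize(live) == normalize(expected_rules)
```

For example, `live_rules_match("https://example.com", "User-agent: *\nDisallow: /")` would fetch `https://example.com/robots.txt` and compare it with the two-line rule set, where `example.com` stands in for your own domain.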

Caution: Incorrectly configuring your robots.txt file can prevent search engines from crawling and indexing your entire site, which can severely impact your SEO. Always double-check your syntax before publishing.
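
One way to double-check syntax is a small script that flags lines that do not look like valid directives. The sketch below is illustrative only: the `lint_robots` helper and its list of recognized fields are assumptions, it covers only the most common directives, and it is not a full validator.

```python
# Fields this sketch accepts; other crawler-specific fields would be flagged.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}


def lint_robots(text):
    """Return a list of (line_number, message) for lines that look malformed."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        field, separator, _value = line.partition(":")
        field = field.strip().lower()
        if not separator:
            problems.append((number, "missing ':' separator"))
        elif field not in KNOWN_FIELDS:
            problems.append((number, f"unrecognized field '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in {"allow", "disallow"} and not seen_user_agent:
            problems.append((number, "rule appears before any User-agent line"))
    return problems


print(lint_robots("User-agent: *\nDisalow: /"))
# [(2, "unrecognized field 'disalow'")]
```

An empty list means the sketch found nothing suspicious. It does not guarantee that the rules do what you intend.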